Understanding Decision Trees: A Flexible and Interpretable Machine Learning Method

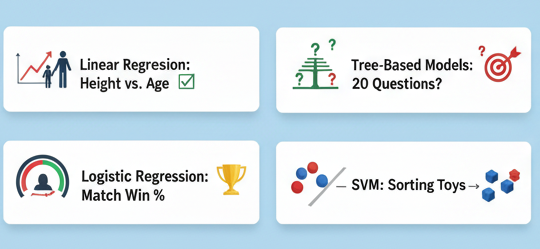

Decision trees are among the most popular machine-learning models because they offer both simplicity and power. They handle complex classification tasks while remaining easy to interpret—making them a natural next step after linear classifiers.

1. How Decision Trees Work 🌿

A decision tree makes predictions by repeatedly splitting data based on feature conditions.

• 🌱 The process begins at the root node, where the model checks one feature.

• ✂️ The dataset is divided depending on whether the feature value meets a chosen threshold.

• 🌴 Each split leads to new branches with additional conditions.

• 🍃 Eventually, the model reaches a leaf node, which assigns the final class label.

This step-by-step structure allows anyone to trace exactly how a prediction was made.
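To make the root-to-leaf walk concrete, here is a minimal sketch of a hand-built tree stored as nested dictionaries. The feature names, thresholds, and class labels are invented for illustration and do not come from a trained model; the `predict` helper is likewise hypothetical.

```python
# A hand-built tree as nested dicts. Feature names and thresholds are made up.
tree = {
    "feature": "petal_length", "threshold": 2.5,
    "left": {"label": "setosa"},          # taken when value <= threshold
    "right": {                            # taken when value > threshold
        "feature": "petal_width", "threshold": 1.7,
        "left": {"label": "versicolor"},
        "right": {"label": "virginica"},
    },
}

def predict(node, sample, path=None):
    """Walk from the root to a leaf, recording every test along the way."""
    path = [] if path is None else path
    if "label" in node:                   # leaf node: return its class label
        return node["label"], path
    value = sample[node["feature"]]
    go_left = value <= node["threshold"]
    op = "<=" if go_left else ">"
    path.append(f"{node['feature']} = {value} {op} {node['threshold']}")
    child = node["left"] if go_left else node["right"]
    return predict(child, sample, path)

label, path = predict(tree, {"petal_length": 4.8, "petal_width": 1.4})
print(label)          # versicolor
for step in path:     # the two tests that led to this leaf
    print(" ", step)
```

The returned `path` is the full explanation of the prediction: one line per condition checked between the root and the leaf.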

2. Visualizing the Splits 🔍

Decision trees can also be viewed as partitioning the feature space into regions through a sequence of binary splits.

• ➗ Each split separates the data into two parts.

• 🔁 These divisions continue recursively, forming increasingly specific groups.

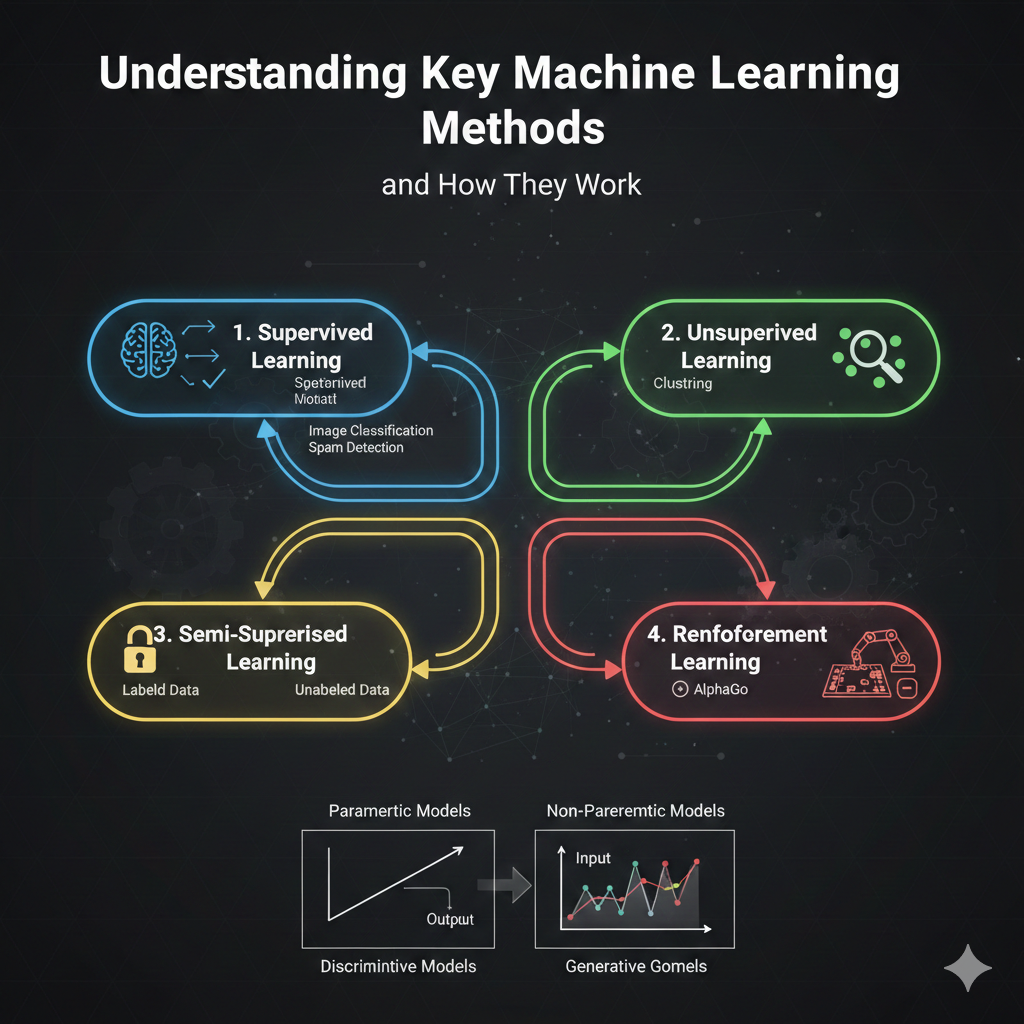

• 📊 Because the number of splits is learned from the data rather than fixed in advance, decision trees are considered non-parametric models.

This adaptability helps them recognize a wide variety of patterns.
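How a single split might be chosen can be sketched in a few lines. The example below assumes Gini impurity as the splitting criterion, which is one common choice and not something this article specifies; the function names and the toy data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, larger for more mixed groups."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(points, labels):
    """Try every feature and midpoint threshold; keep the lowest weighted impurity."""
    best = None
    for feature in range(len(points[0])):
        values = sorted({p[feature] for p in points})
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [y for p, y in zip(points, labels) if p[feature] <= threshold]
            right = [y for p, y in zip(points, labels) if p[feature] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, feature, threshold)
    return best

# Toy 2-D data: the classes separate cleanly along feature 0 only.
points = [(1.0, 5.0), (2.0, 1.0), (3.0, 4.0), (6.0, 2.0), (7.0, 5.0), (8.0, 1.0)]
labels = ["a", "a", "a", "b", "b", "b"]
score, feature, threshold = best_split(points, labels)
print(feature, threshold, score)   # 0 4.5 0.0
```

Building a full tree would apply `best_split` again to each of the two resulting groups, which is the recursive division described above.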

3. Predicting Probabilities 🎯

Decision trees don’t just output labels—they can also estimate probabilities.

• 📦 Each leaf node typically holds several training samples, often from more than one class.

• 📉 The fraction of a leaf’s samples belonging to each class becomes the model’s estimated probability for that class.

This adds nuance to predictions: a leaf whose samples are split 55/45 signals far less certainty than one split 95/5.
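The probability estimate is just a count. As a small sketch, assume a hypothetical leaf that ended up holding four training samples; the labels and the helper name are made up for illustration.

```python
from collections import Counter

def leaf_probabilities(leaf_labels):
    """Turn the training labels in one leaf into class-probability estimates."""
    counts = Counter(leaf_labels)
    total = len(leaf_labels)
    return {cls: count / total for cls, count in counts.items()}

leaf = ["spam", "spam", "spam", "ham"]    # hypothetical leaf with 4 training samples
probs = leaf_probabilities(leaf)
prediction = max(probs, key=probs.get)    # the predicted label is the majority class

print(probs)        # {'spam': 0.75, 'ham': 0.25}
print(prediction)   # spam
```

Any new sample that lands in this leaf would receive the same estimate: 75% spam, 25% ham.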

4. Extending Decision Trees: Bagging and Boosting 🚀

Although powerful, a single decision tree can be sensitive to small changes in the data. To improve stability and accuracy, ensemble techniques combine many trees:

• 🧺 Bagging (Bootstrap Aggregating): Builds many trees on bootstrap samples (random subsets of the data drawn with replacement) and combines their predictions by averaging or majority vote.

• 🔥 Boosting: Trains trees sequentially, where each new tree focuses on correcting the errors of the trees before it.

Random forests (built on bagging) and gradient boosting machines (built on boosting) are popular examples of these ensembles.
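Bagging can be sketched with the simplest possible tree, a one-split "stump" on a single numeric feature. This is an illustrative toy under stated assumptions, not how any particular library implements it: the helper names, the data, the number of trees, and the seed are all hypothetical.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-split tree on a single numeric feature by minimizing errors."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        # If nothing falls on the right side, reuse the left label.
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return lambda x: left_label if x <= threshold else right_label

def bagged_stumps(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample, i.e. drawn with replacement."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in range(len(xs))]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    # Aggregate by majority vote across all stumps.
    return lambda x: Counter(s(x) for s in stumps).most_common(1)[0][0]

xs = [1.0, 2.0, 3.0, 4.0, 6.0, 7.0, 8.0, 9.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
model = bagged_stumps(xs, ys)
print(model(1.0), model(9.0))
```

Each stump sees a slightly different resampled dataset and may place its threshold differently; the vote smooths out those individual quirks, which is the stability gain bagging aims for.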

🌟 Conclusion

Decision trees remain essential in machine learning because they are adaptable, transparent, and effective across many real-world applications. Their interpretability and their role as building blocks for ensemble methods make them a reliable foundation in AI development.
