Understanding the Dimensions of LLMs: A Practical Guide for Builders and Businesses

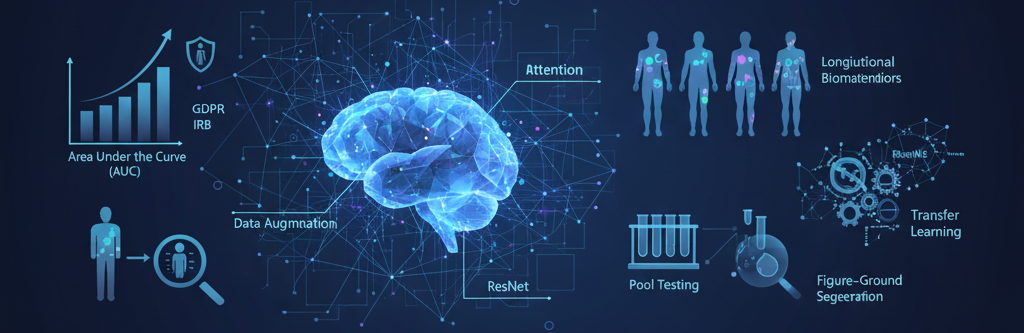

As the world embraces the capabilities of large language models (LLMs), understanding their structure is no longer just for researchers — it’s essential for product builders, business leaders, and AI adopters alike. At LLMSoftware.com, we’re committed to helping teams leverage LLMs intelligently and responsibly. A powerful visual shared online offers a clear breakdown of the many layers involved in LLM development and deployment. Here's our take on how these dimensions shape modern AI solutions.

1. Pre-Training: Foundation of Intelligence

LLMs begin with pre-training on vast datasets. This phase creates the raw capabilities of the model.
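For autoregressive text models such as GPT-3 and LLaMA, pre-training typically means next-token prediction. The model is trained to minimize the cross-entropy of each token given the tokens before it. The sketch below computes that loss for one sequence. The `predict_probs` function is a hypothetical stand-in for a real model. Real training runs this over huge batches of tensors and updates the weights with gradients, which is omitted here.

```python
import math

def next_token_loss(token_ids, predict_probs):
    """Average next-token cross-entropy (in nats) over one sequence.

    token_ids: list of integer token ids.
    predict_probs: callable mapping a prefix (list of ids) to a dict
        {token_id: probability} for the next token. It stands in for a real model.
    """
    if len(token_ids) < 2:
        raise ValueError("need at least two tokens to predict a next token")
    losses = []
    for t in range(1, len(token_ids)):
        probs = predict_probs(token_ids[:t])
        p = probs.get(token_ids[t], 1e-12)  # tiny floor avoids log(0)
        losses.append(-math.log(p))
    return sum(losses) / len(losses)

def uniform_model(vocab_size):
    """Toy 'model' that gives every token in the vocabulary equal probability."""
    def predict(prefix):
        return {i: 1.0 / vocab_size for i in range(vocab_size)}
    return predict

loss = next_token_loss([3, 1, 4, 1, 5], uniform_model(10))
print(round(loss, 4))  # 2.3026, i.e. ln(10): a uniform guess over 10 tokens
```

Lower is better. A model that has learned patterns in its training data assigns higher probability to the tokens that actually come next, so it scores below this uniform baseline.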

- Single-Modal Models like GPT-3, PaLM, or LLaMA are trained on text data.

- Multi-Modal Models such as GPT-4, Gemini, and PaLM-E integrate vision, text, and more — enabling image captioning, document analysis, and multi-input tasks.

🧠 Why it matters: Choosing between single- and multi-modal models depends on your use case. Are you dealing with just text, or do you need image, speech, or sensor data?

2. Fine-Tuning: Making Models Useful

After pre-training, models are fine-tuned for specific tasks or domains.

- Transfer Learning takes a large pre-trained model and adapts it to a new task or domain, typically with far less data than training from scratch would require.

- Instruction Tuning trains models on instruction-response examples, written by humans or generated automatically, so they follow prompts more precisely.

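- Alignment aims to make model behavior safer and more helpful, for example via RLHF (reinforcement learning from human feedback) or DPO (direct preference optimization).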

- PEFT (Parameter-Efficient Fine-Tuning) methods like LoRA and Adapters keep the base model frozen and train only a small set of added parameters, which cuts compute and memory needs.

- Quantization (storing weights at lower numeric precision) and Pruning (removing less important weights) shrink models and can speed up inference, which is essential for on-device or edge deployment.
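To make the PEFT idea concrete: LoRA (low-rank adaptation) freezes a pre-trained weight matrix W and learns a low-rank update B·A, scaled by alpha/r. Only r·(d_in + d_out) parameters are trained instead of d_in·d_out. The following is a minimal pure-Python sketch of one adapted linear layer. It covers the forward pass only, not training, and the toy sizes, rank, and alpha are illustrative assumptions rather than recommendations.

```python
import random

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def lora_forward(x, W, A, B, alpha, r):
    """Compute y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen pre-trained weight; only A (r x d_in)
    and B (d_out x r) would be updated during fine-tuning.
    x is a column vector stored as a d_in x 1 matrix.
    """
    base = matmul(W, x)
    delta = matmul(B, matmul(A, x))
    scale = alpha / r
    return [[b[0] + scale * d[0]] for b, d in zip(base, delta)]

def trainable_params(d_in, d_out, r):
    """Return (full fine-tuning count, LoRA count) for one weight matrix."""
    return d_in * d_out, r * (d_in + d_out)

# Toy layer: d_in = 3, d_out = 2, rank r = 1 (illustrative sizes).
random.seed(0)
d_in, d_out, r, alpha = 3, 2, 1, 2.0
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[random.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
B = [[0.0] * r for _ in range(d_out)]  # B starts at zero, so the update starts at zero
x = [[1.0], [2.0], [3.0]]

y = lora_forward(x, W, A, B, alpha, r)
print(y)                                 # [[1.0], [2.0]], same as W x at initialization
print(trainable_params(4096, 4096, 8))   # (16777216, 65536)
```

For a 4096×4096 projection at rank 8, LoRA trains 65,536 parameters instead of about 16.8 million, roughly 0.4%. Initializing B to zero, as the LoRA paper does, means the adapted layer starts out behaving exactly like the original.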

⚙️ Takeaway: Fine-tuning enables domain-specific applications — from legal document analysis to customer service bots.

3. Efficiency: Evaluating Performance and Cost

Running LLMs efficiently involves balancing accuracy, safety, and cost.

- NLG (Natural Language Generation) and NLU (Natural Language Understanding) tasks include summarization, sentiment analysis, translation, and reasoning.

- Evaluation Metrics go beyond accuracy — they include bias, memorization, toxicity, and cost.

- Alignment and Dataset Quality play a key role in trust and usability.

💰 Why you should care: Efficient deployment can make or break your AI ROI. Think about GPU costs, memory limits, and alignment safety before you scale.
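A quick first check on memory limits is to estimate how much memory the weights alone need: parameter count times bytes per parameter. The sketch below assumes a hypothetical 7-billion-parameter model and counts weights only. Activations, the KV cache, and framework overhead add more at inference time, and fine-tuning also needs memory for gradients and optimizer state.

```python
# Bytes needed to store one parameter at each precision
# (ignores the small per-block scale overhead of real quantization schemes).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(num_params, precision):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Hypothetical 7-billion-parameter model.
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(7e9, precision):.1f} GB")
```

This prints 28.0, 14.0, 7.0, and 3.5 GB respectively, which is why quantization matters for deployment: at 4-bit precision the same weights need one eighth of the fp32 footprint.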

4. Inference: Real-Time Interaction

Inference is where LLMs come to life in applications.

- Prompting allows for instruction-based behavior, whether it’s a chatbot or code generator.

- Beyond plain Text Completion, techniques such as Chain-of-Thought (CoT), In-Context Learning (ICL), and Tree-of-Thought (ToT) can improve reasoning and planning.
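In-context learning steers a model with demonstrations placed directly in the prompt, with no weight updates. Chain-of-thought prompting additionally asks the model to write out intermediate reasoning before its answer. The helper below is a hypothetical sketch that only builds the prompt string. The `Input:`/`Output:` layout and the "Let's think step by step" cue are common conventions, not requirements of any particular model, and sending the prompt to an LLM API is left out.

```python
def build_prompt(instruction, examples, query, chain_of_thought=False):
    """Assemble a few-shot (in-context learning) prompt as plain text.

    examples: list of (input, output) pairs shown to the model as demonstrations.
    chain_of_thought: if True, end with a step-by-step cue instead of a bare answer slot.
    """
    parts = [instruction.strip(), ""]
    for inp, out in examples:
        parts.append(f"Input: {inp}")
        parts.append(f"Output: {out}")
        parts.append("")  # blank line between demonstrations
    parts.append(f"Input: {query}")
    parts.append("Output: Let's think step by step." if chain_of_thought else "Output:")
    return "\n".join(parts)

prompt = build_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The support team fixed my issue in minutes.", "positive"),
     ("The invoice export keeps crashing.", "negative")],
    "Setup was painless and the docs are clear.",
)
print(prompt)
```

Swapping the demonstrations is often enough to retarget the same model to a new task, which is the practical appeal of prompting before reaching for fine-tuning.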

🤖 Pro tip: Smart prompting strategies can dramatically improve your outputs — even without additional fine-tuning.

5. Applications: Industry-Driven Impact

LLMs are being used in nearly every industry:

- General Purpose: Chatbots, summarization, virtual assistants

- Medical & Education: Diagnosis support, tutoring

- Finance & Law: Contract analysis, fraud detection

- Science & Robotics: Research insights, autonomous systems

🚀 LLMSoftware.com Advantage: We help you plug LLMs into your stack — integrating with tools like QuickBooks, DocuSign, HubSpot, and Make.com — with structured workflows and compliance.

Final Thoughts

The landscape of LLMs is vast, but with the right understanding of each dimension — from pre-training to applications — businesses can unlock massive value.

At LLMSoftware.com, our platform simplifies this complexity so you can focus on outcomes, not infrastructure. Whether you're building your first AI tool or scaling an enterprise solution, we provide the building blocks and expert guidance you need.

📩 Ready to build with LLMs? Contact us today or sign up for early access.
