Unlocking the Language of AI: Key Terms You Need to Know

Artificial intelligence (AI) is revolutionizing our world—but if you’re not immersed in the field, the sheer volume of technical jargon can feel overwhelming. Whether you’re a student, a professional, or simply an enthusiast eager to understand more, a solid grasp of foundational AI terms makes all the difference.

Here’s a curated walkthrough of vital concepts that demystify AI, machine learning, neural networks, and more, based on the comprehensive glossary from MIT xPRO.

Foundational AI Concepts

- Artificial Intelligence (AI): The science of making computers perform tasks that typically require human intelligence, such as problem-solving, pattern recognition, and learning.

- Artificial General Intelligence (AGI): The hypothetical stage where AI matches or exceeds human intelligence across an unrestricted range of tasks.

- Machine Learning: A subset of AI where algorithms learn patterns from data to make predictions or decisions without being explicitly programmed.

- Deep Learning: Machine learning methods using multi-layered neural networks capable of learning data representations automatically and hierarchically.
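To make "learning from data without being explicitly programmed" a little more concrete, here is a minimal sketch. Instead of hard-coding a cutoff, the hypothetical `learn_threshold` helper picks one from labeled examples. The data and function name are invented for illustration only.

```python
def learn_threshold(examples):
    """Pick the cutoff that classifies the most labeled examples correctly.

    `examples` is a list of (value, label) pairs, where label is True or False.
    A value is predicted True when it is at or above the threshold.
    """
    candidates = sorted(value for value, _ in examples)

    def correct_count(threshold):
        return sum((value >= threshold) == label for value, label in examples)

    return max(candidates, key=correct_count)


# Made-up labeled data: small values are labeled False, larger ones True.
EXAMPLES = [(1.0, False), (2.0, False), (3.5, True), (4.0, True), (5.0, True)]

threshold = learn_threshold(EXAMPLES)
print("learned threshold:", threshold)
print("prediction for 4.2:", 4.2 >= threshold)
```

Nobody typed the cutoff into the program; it was derived from the examples. Real machine learning models do the same thing at a much larger scale, with many more adjustable values.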

Neural Networks and Their Building Blocks

- Artificial Neural Network (ANN): A computing system loosely inspired by the brain, in which layers of connected ‘neurons’ process information.

- Activation Function / Activation Layer: Introduces nonlinearity into the network, allowing it to learn complex patterns—common examples include ReLU, sigmoid, and tanh.

- Input, Hidden, and Output Layers: The input layer receives data, hidden layers transform it, and the output layer provides predictions or classifications.

- Weights: Adjustable values that determine the strength of connections between ‘neurons’—crucial for learning during training.

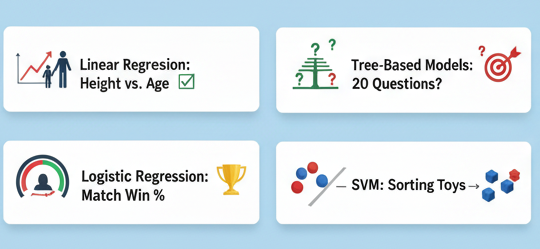
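The building blocks above fit together in what is usually called a forward pass. The sketch below is a toy network with two inputs, one hidden layer, and one output, written in plain Python. The weights and biases are hand-picked, hypothetical values; a real network would learn them during training.

```python
import math


def relu(x):
    """ReLU activation: keeps positive values, replaces negatives with zero."""
    return max(0.0, x)


def sigmoid(x):
    """Sigmoid activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: a weighted sum per neuron, then the activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters (not learned).
HIDDEN_WEIGHTS = [[0.5, -1.0], [1.0, 1.0]]  # 2 hidden neurons, 2 inputs each
HIDDEN_BIASES = [0.0, -0.5]
OUTPUT_WEIGHTS = [[1.0, -1.0]]              # 1 output neuron, 2 hidden inputs
OUTPUT_BIASES = [0.0]


def forward(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, sigmoid)[0]


print(forward([1.0, 0.0]))
print(forward([0.0, 1.0]))
```

Changing any weight changes the output, which is exactly what training exploits.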

Key Machine Learning Terms

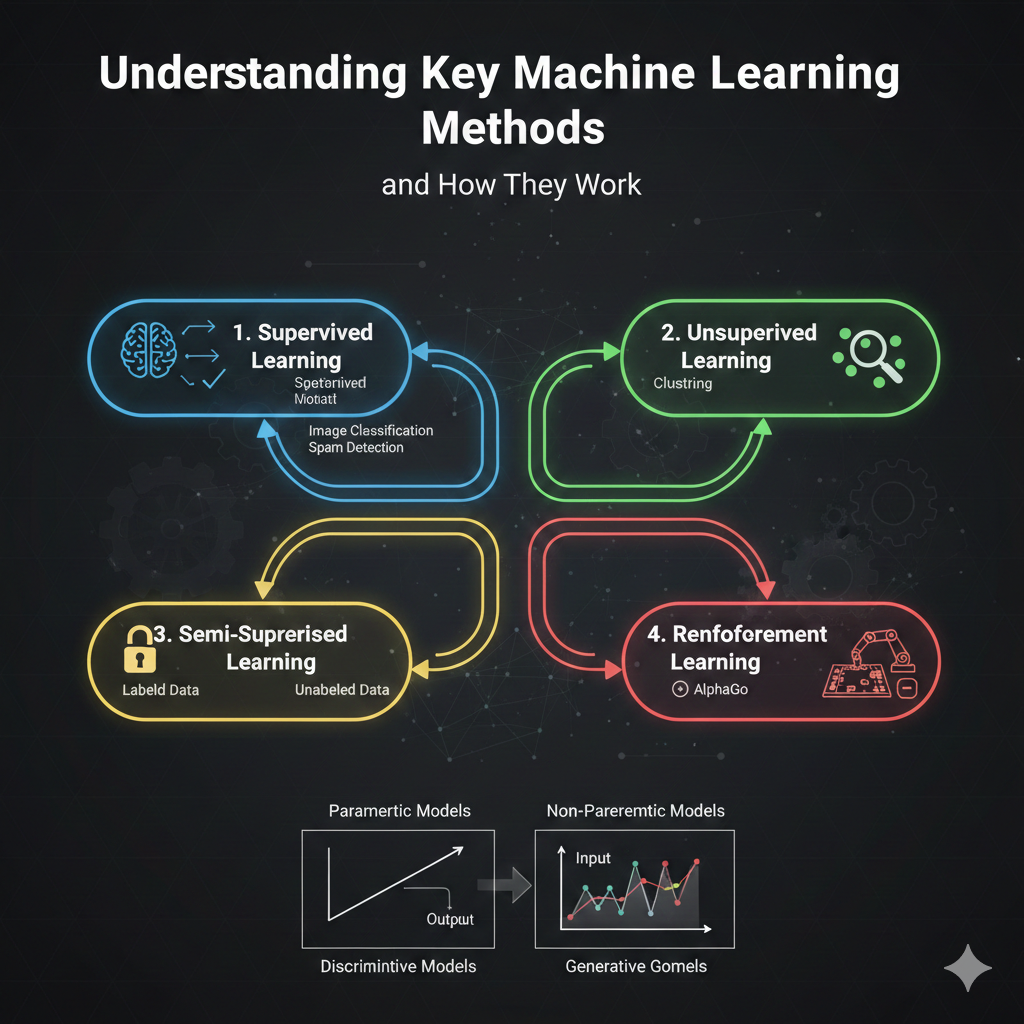

- Supervised Learning: Models learn from labeled data, mapping inputs to known outputs.

- Unsupervised Learning: Models find patterns and structures in data without labeled outputs.

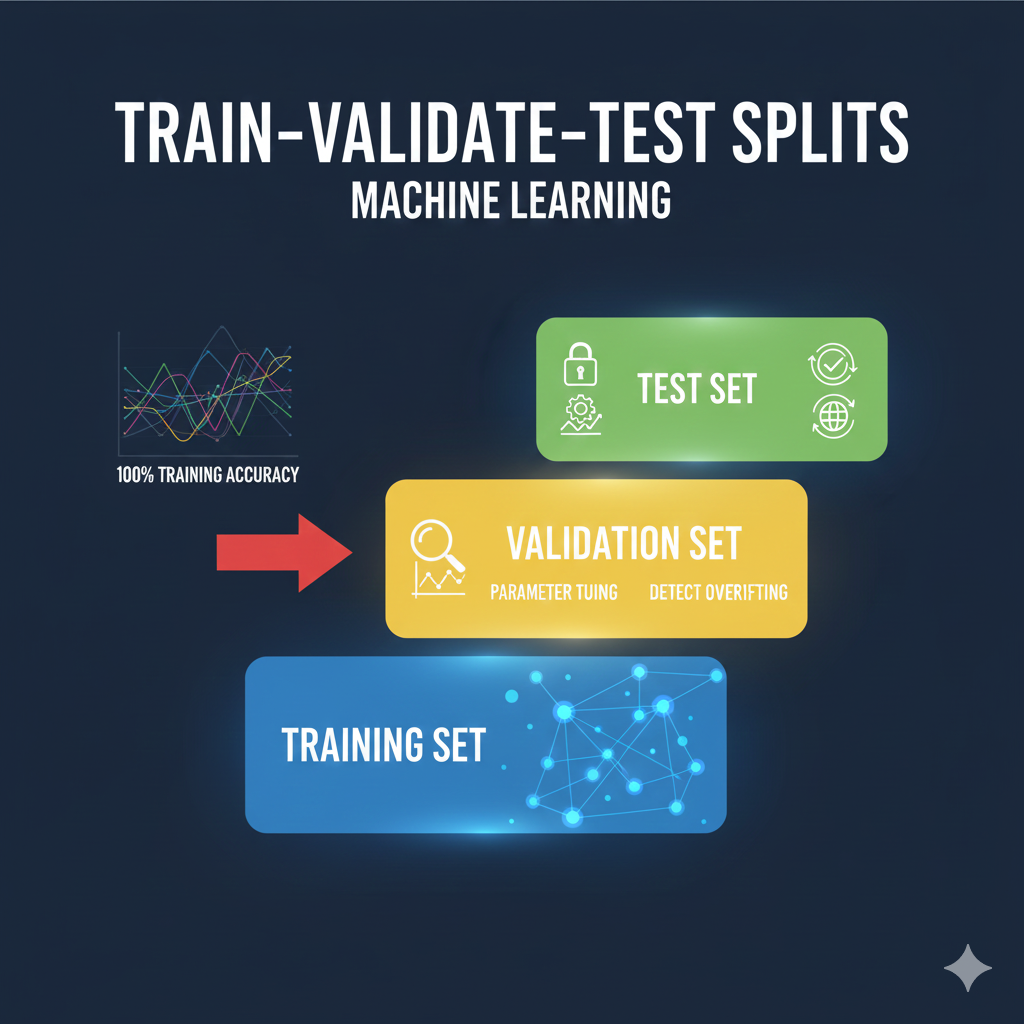

- Overfitting: When a model fits the noise and quirks of its training data so closely that it fails to generalize to new inputs. Regularization techniques such as weight decay, dropout, and early stopping help counter it.

- Loss Function: Quantifies the error between predictions and actual results, guiding how the model learns.
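Loss functions and overfitting are easier to see with numbers. The sketch below uses mean squared error (one common loss, chosen here as an assumption) on made-up data that roughly follows y = 2x. It compares a simple rule with a hypothetical "memorizer" that just returns the label of the nearest training point.

```python
def mean_squared_error(predictions, targets):
    """Average squared difference between predictions and true values."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Made-up data: training labels carry a little noise, test labels follow y = 2x.
TRAIN_X, TRAIN_Y = [1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8]
TEST_X, TEST_Y = [1.4, 2.6, 3.4], [2.8, 5.2, 6.8]


def simple_model(x):
    """A plain rule that captures the overall trend."""
    return 2.0 * x


def memorizer(x):
    """Returns the label of the closest training point, noise included."""
    nearest = min(range(len(TRAIN_X)), key=lambda i: abs(TRAIN_X[i] - x))
    return TRAIN_Y[nearest]


def evaluate(model):
    """Return (training loss, test loss) for a model."""
    train_loss = mean_squared_error([model(x) for x in TRAIN_X], TRAIN_Y)
    test_loss = mean_squared_error([model(x) for x in TEST_X], TEST_Y)
    return train_loss, test_loss


print("simple model (train, test):", evaluate(simple_model))
print("memorizer    (train, test):", evaluate(memorizer))
```

The memorizer reaches zero loss on the training data but does worse on the unseen test points, while the simple rule has a small training loss and generalizes well. That gap between training loss and test loss is the usual warning sign of overfitting.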

Training and Optimization

- Gradient Descent: The core optimization algorithm that iteratively adjusts model parameters to minimize loss.

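- Backpropagation: The algorithm that computes how much each weight contributed to the prediction error by applying the chain rule backward through the network, layer by layer. An optimizer such as gradient descent then uses those gradients to update the weights.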

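- Batch Normalization: A layer that normalizes its inputs across each mini-batch to roughly zero mean and unit variance, then applies a learned scale and shift. It typically speeds up training and improves stability.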
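As a rough illustration of gradient descent, the sketch below fits a straight line y = w·x + b to a handful of made-up points by repeatedly stepping against the gradient of the mean squared error. The data, learning rate, and step count are assumptions chosen so the example converges. The two gradient formulas are the chain rule worked out by hand for this one-layer case; backpropagation automates that bookkeeping for deeper networks.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every training point at the current w and b.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step in the direction that reduces the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up points that lie exactly on y = 2x + 1.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(XS, YS)
print(f"w = {w:.4f}, b = {b:.4f}")
```

The learning rate here is itself a hyperparameter: make it too large and the updates overshoot and diverge, too small and training crawls.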

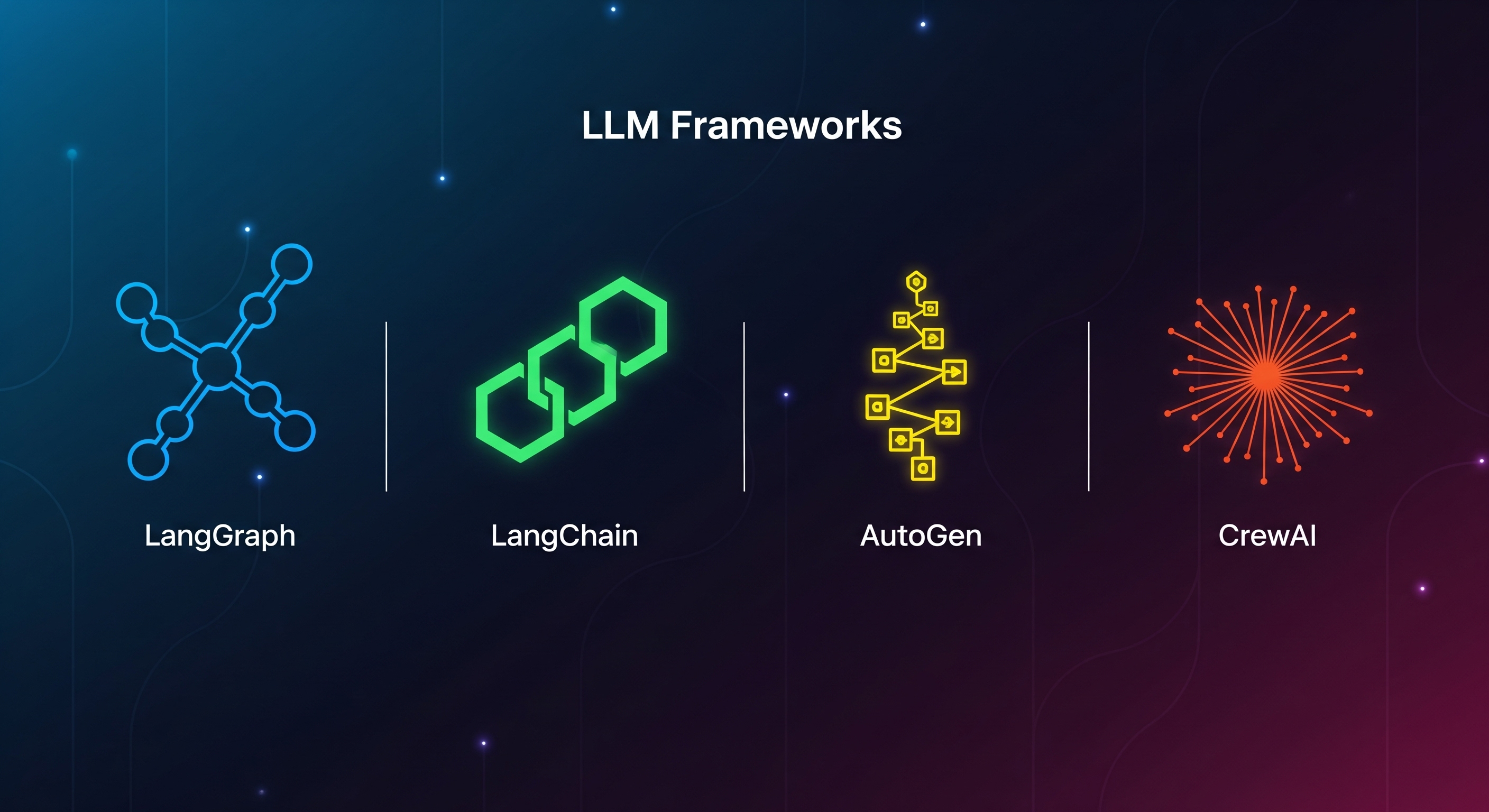
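The normalization step inside batch normalization can be sketched for a single feature as follows. This is a simplified, training-time view: `gamma` and `beta` stand in for the scale and shift a real layer would learn, and real implementations also keep running statistics for use at inference time.

```python
import math


def batch_normalize(values, gamma=1.0, beta=0.0, epsilon=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift it."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    # epsilon guards against division by zero when the batch has no spread.
    return [gamma * (v - mean) / math.sqrt(variance + epsilon) + beta for v in values]


# A made-up mini-batch of four activations for one feature.
normalized = batch_normalize([10.0, 20.0, 30.0, 40.0])
print(normalized)
```

Whatever the original scale of the inputs, the output is centered near zero with a spread close to one, which keeps later layers from having to chase a moving target.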

Popular AI and ML Architectures

- Convolutional Neural Network (CNN): Designed for processing images, recognizing edges, shapes, and objects through layered convolutions.

- Recurrent Neural Network (RNN): Handles sequential data, important for time series and natural language tasks.

- Generative Adversarial Networks (GANs): Two neural nets—generator and discriminator—compete to create realistic synthetic data.
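To give a feel for the convolutions a CNN relies on, the sketch below slides a small filter over a tiny made-up image. The filter values are hand-picked to respond to a vertical edge; in a real CNN these values are learned. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped) with no padding.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# Made-up 3x4 image: dark on the left (0), bright on the right (1).
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds where brightness increases left to right.
VERTICAL_EDGE = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(IMAGE, VERTICAL_EDGE)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The output is zero over the flat regions and lights up only in the column where dark meets bright. Stacking many such filters, layer after layer, is how a CNN builds up from edges to shapes to whole objects.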

Model Evaluation and Improvement

- Hyperparameters: Settings configured before training (e.g., learning rate, number of layers) that dramatically affect model performance.

- Validation / Testing / Benchmarks: Essential steps to measure how well a trained model generalizes to unseen data, avoiding pitfalls like overfitting.

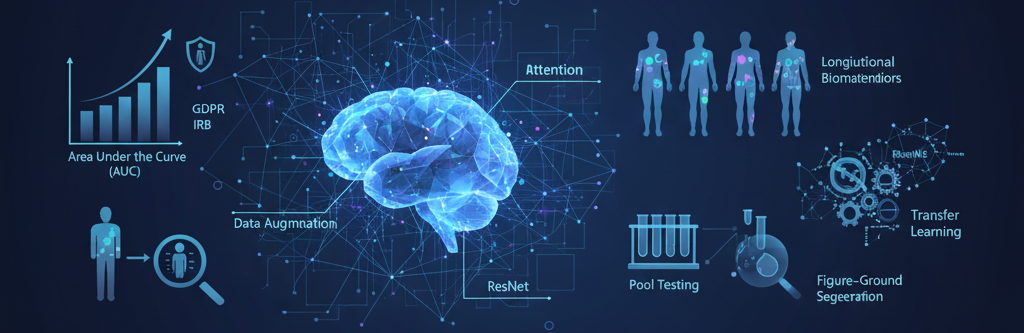

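- Area Under the Curve (AUC): The area under the ROC curve, which plots the true positive rate against the false positive rate at every classification threshold. A score of 0.5 is no better than random guessing; 1.0 means the model ranks every positive example above every negative one.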
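One way to build intuition for AUC: it equals the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one, with ties counted as half. The sketch below computes it directly from that definition using made-up labels and scores. It compares every pair, so it only suits small inputs; libraries use faster methods.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # a tie counts as half
    return wins / (len(positives) * len(negatives))


# Made-up model outputs: 1 = positive class, 0 = negative class.
LABELS = [1, 1, 0, 1, 0, 0]
SCORES = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

print(roc_auc(LABELS, SCORES))
```

In this example, eight of the nine positive-negative pairs are ranked correctly; the one miss is the positive scored 0.6 sitting below the negative scored 0.7.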

Challenges and Considerations

- Algorithmic Bias: The risk of AI reflecting pre-existing prejudices in data or design; ongoing bias remediation is crucial.

- Explainability: The need for AI systems to provide clear, understandable reasons for their outcomes.

- Adversarial Attacks: Techniques designed to fool AI models, often by subtly altering input data.
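To show how a subtle input change can flip a prediction, here is a toy sketch in the spirit of gradient-sign attacks. The model is a hypothetical linear classifier with invented weights; for a linear model, the gradient of the score with respect to the input is just the weight vector, so nudging each feature slightly against the sign of its weight lowers the score as fast as possible.

```python
def sign(value):
    """Return 1 for positive numbers, -1 for negative numbers, 0 for zero."""
    return (value > 0) - (value < 0)


# Hypothetical linear classifier: predicts class 1 when the score is positive.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.2


def score(features):
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def predict(features):
    return 1 if score(features) > 0 else 0


def perturb(features, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score."""
    return [x - epsilon * sign(w) for x, w in zip(features, WEIGHTS)]


original = [0.3, 0.2, 0.4]
adversarial = perturb(original, epsilon=0.15)

print("original prediction:   ", predict(original))
print("adversarial prediction:", predict(adversarial))
```

No feature moves by more than 0.15, yet the predicted class changes. Attacks on deep networks follow the same idea, using gradients computed through the whole model.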

Emerging Topics

- Transfer Learning: Using knowledge learned from one task to boost performance on related new tasks.

- Prompt Engineering: Crafting effective prompts to get optimal replies from foundation models like GPT.

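- Digital Twins & Augmented Reality (AR): AI isn’t just about software. A digital twin is a virtual model of a physical object or system that is kept in sync with real-world data, while AR overlays digital content on the physical world. Both are transforming how we work with physical spaces.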

Wrap-Up

AI’s rapid growth means its vocabulary is expanding and evolving. Having a glossary at hand isn’t just useful—it’s indispensable as you dive deeper into this transformative field. Whether you’re tinkering with neural networks, curious about GPT, or pondering how AI will shape the future, these terms are your starting compass on the AI journey.

Stay curious and keep learning!
