🚀 Mastering Large Language Models (LLMs) in 2025: The Ultimate Cheat Sheet

The world of AI is evolving fast, and at the heart of this transformation are Large Language Models (LLMs). Whether you're a developer, researcher, or simply an AI enthusiast, understanding LLMs is crucial in 2025. Here's a compact, visual guide to the essentials, from core concepts to practical tools.

🧠 What is an LLM?

A Large Language Model is a neural network trained on massive text corpora to predict text. It can generate, summarize, translate, and answer questions in natural language, and it shows some ability to reason. Most LLMs are based on the Transformer architecture, which relies on self-attention; generative models such as GPT are also autoregressive, producing text one token at a time.
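Autoregression simply means generating one token at a time and feeding each prediction back in as input. The minimal sketch below shows greedy decoding, assuming a hypothetical toy bigram score table (`BIGRAM_SCORES`) in place of a real Transformer; `greedy_generate` is an illustrative name, not a library API. A real LLM would score the next token using the whole context, not just the previous token.

```python
from typing import Dict, List

# Hypothetical toy "model": next-token scores conditioned only on the previous token.
# A real LLM conditions on the whole context window using a Transformer.
BIGRAM_SCORES: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"</s>": 1.0},
}


def greedy_generate(start: str = "<s>", max_new_tokens: int = 10) -> List[str]:
    """Autoregressive greedy decoding: repeatedly append the highest-scoring next token."""
    tokens = [start]
    for _ in range(max_new_tokens):
        scores = BIGRAM_SCORES.get(tokens[-1])
        if not scores:  # no known continuation: stop
            break
        next_token = max(scores, key=scores.get)
        if next_token == "</s>":  # end-of-sequence token stops generation
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start token


print(" ".join(greedy_generate()))  # the cat sat
```

Swapping `max` for sampling from the scores (optionally with a temperature) is the usual way to get more varied output instead of the single most likely continuation.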

🔑 Core Concepts You Must Know

- Tokens & Embeddings: Tokenization splits raw text into tokens (words or subword pieces), and an embedding layer maps each token ID to a dense vector.

- Positional Encoding: Adds word-order information to token embeddings, since attention on its own ignores order.

- Transformer Blocks: The backbone of the model, each made up of self-attention and feed-forward layers and stacked many times.

- Context Window: The maximum number of tokens the model can process at once.

- Attention Mechanism: Lets each token weigh every other token in the context, so the model can relate words regardless of how far apart they are.
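To make these concepts concrete, here is a minimal pure-Python sketch of tokens, embeddings, positional encoding, the context window, and attention. It assumes a hypothetical whitespace tokenizer and 4-word vocabulary, random 4-dimensional embeddings, an 8-token context window, the sinusoidal positional encoding from the original Transformer paper, and single-head scaled dot-product attention that uses the inputs directly as queries, keys, and values. Real models use subword tokenizers, learned Q/K/V projections, and many heads.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    """Toy tokenizer: lowercase whitespace split; unknown words map to ID 0."""
    return [vocab.get(word, 0) for word in text.lower().split()]


def positional_encoding(position: int, d_model: int) -> Vector:
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    pe = []
    for i in range(d_model):
        angle = position / (10000 ** (2 * (i // 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)  # how much this token attends to each token
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


# Hypothetical toy setup: 4-word vocabulary, 4-dim random embeddings, 8-token context window.
vocab = {"<unk>": 0, "the": 1, "cat": 2, "sat": 3}
d_model, context_window = 4, 8
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in vocab]

ids = tokenize("The cat sat", vocab)[-context_window:]  # keep only tokens that fit the window
x = [
    [e + p for e, p in zip(embedding_table[tid], positional_encoding(pos, d_model))]
    for pos, tid in enumerate(ids)
]
y = attention(x, x, x)  # self-attention: queries, keys and values all come from x
print(ids, len(y), len(y[0]))  # [1, 2, 3] 3 4
```

Each output row is a weighted average of the value vectors, which is how attention mixes information across positions.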

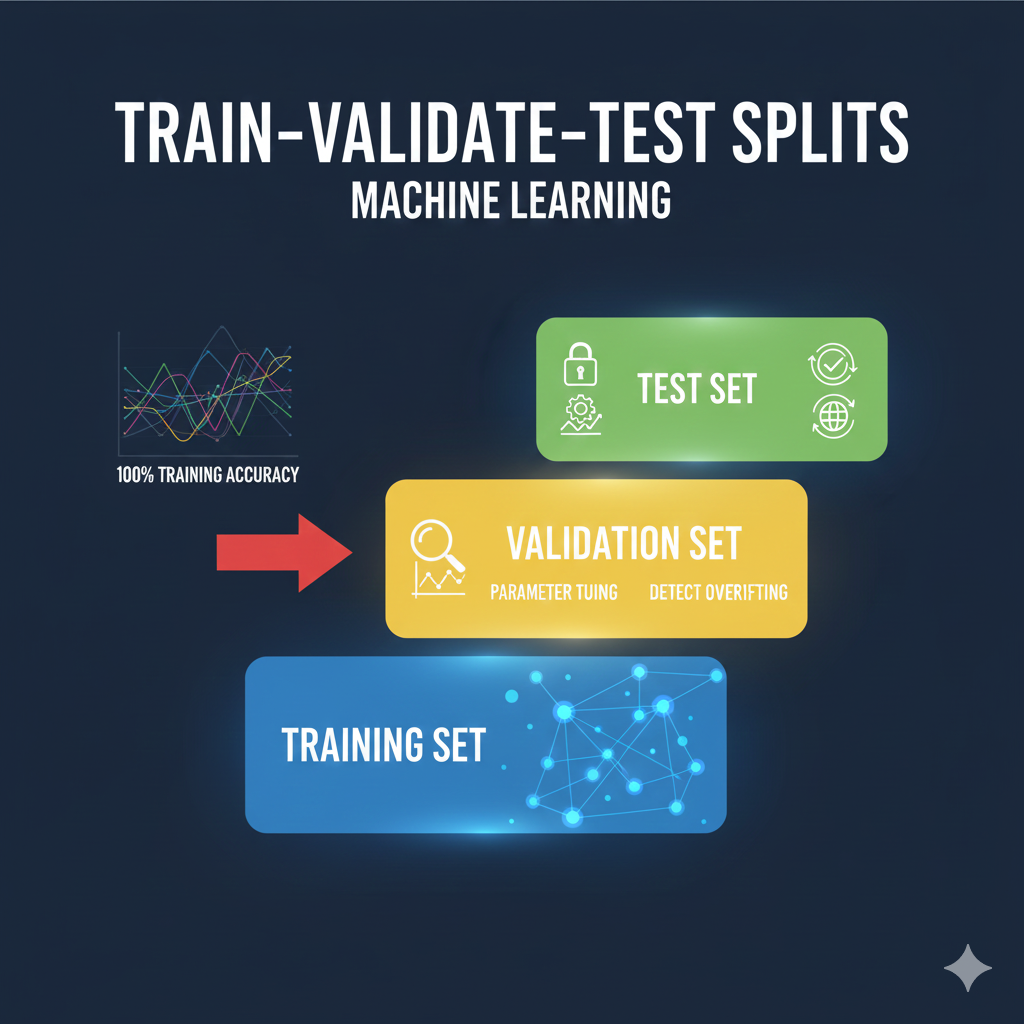

- Self-Supervised Learning: The training labels come from the text itself (for example, the next token or a hidden word), so no manual annotation is needed.

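- Causal vs. Masked Modeling: GPT-style models use causal modeling (predict the next token from the tokens before it); BERT uses masked modeling (fill in hidden tokens using context on both sides).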
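The difference between causal and masked modeling is easiest to see in how training examples are built from raw text, which is also why both count as self-supervised. The sketch below is illustrative: the function names are hypothetical, and the masked version is simplified (BERT also leaves some selected tokens unchanged or swaps in random tokens instead of always inserting `[MASK]`).

```python
import random
from typing import Dict, List, Tuple

MASK = "[MASK]"


def causal_lm_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Causal (GPT-style): each prefix is an input, the token that follows is the label."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(
    tokens: List[str], mask_prob: float = 0.15, seed: int = 0
) -> Tuple[List[str], Dict[int, str]]:
    """Masked (BERT-style, simplified): hide random tokens; labels are the originals."""
    rng = random.Random(seed)
    inputs: List[str] = []
    labels: Dict[int, str] = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels[i] = tok  # the model must recover this from both-side context
        else:
            inputs.append(tok)
    return inputs, labels


sentence = "the cat sat on the mat".split()
for context, target in causal_lm_pairs(sentence)[:2]:
    print(context, "->", target)
# ['the'] -> cat
# ['the', 'cat'] -> sat
print(masked_lm_example(sentence, mask_prob=0.3))
```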

🛣 Roadmap to Master LLMs

- Learn Python, NumPy, Pandas, and the core math for ML (linear algebra, probability, calculus).

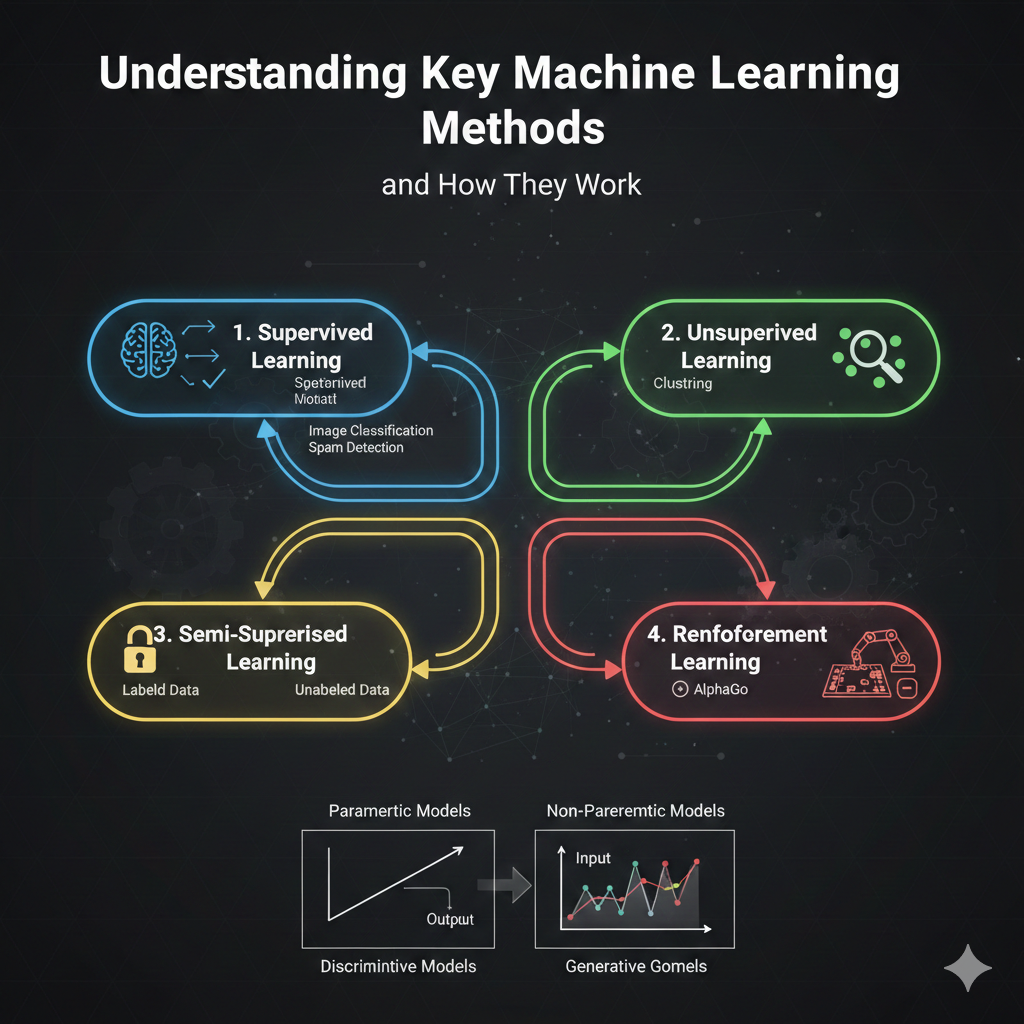

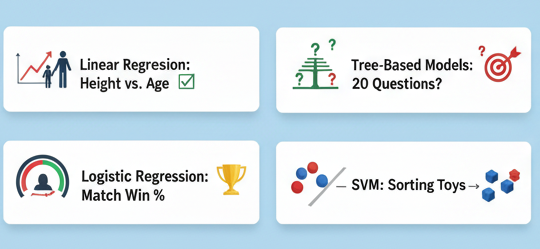

- Understand classical ML/DL concepts like CNNs and logistic regression.

- Dive deep into Transformers.

- Get hands-on with PyTorch & Hugging Face.

- Practice prompt engineering (zero-shot, few-shot, chain-of-thought).

- Fine-tune using LoRA/QLoRA.

- Build RAG pipelines (LangChain + FAISS + LLM).
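Prompt engineering is largely careful string construction. A minimal sketch, assuming a plain-text `Input:`/`Output:` template (a hypothetical format, not any particular model's chat template; `build_prompt` is an illustrative name): an empty example list gives a zero-shot prompt, demonstrations make it few-shot, and a "Let's think step by step." cue is one common way to elicit chain-of-thought reasoning.

```python
from typing import Sequence, Tuple


def build_prompt(
    instruction: str,
    query: str,
    examples: Sequence[Tuple[str, str]] = (),
    chain_of_thought: bool = False,
) -> str:
    """Zero-shot if `examples` is empty, few-shot otherwise; optionally cue step-by-step reasoning."""
    parts = [instruction, ""]
    for example_input, example_output in examples:  # few-shot demonstrations
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    parts.append(f"Input: {query}")
    answer_prefix = "Output:"
    if chain_of_thought:
        # A widely used zero-shot chain-of-thought cue; exact wording is a design choice.
        answer_prefix += " Let's think step by step."
    parts.append(answer_prefix)
    return "\n".join(parts)


instruction = "Classify the sentiment as positive or negative."
zero_shot = build_prompt(instruction, "I loved this film.")
few_shot = build_prompt(
    instruction,
    "I loved this film.",
    examples=[("The plot was dull.", "negative"), ("Great acting!", "positive")],
)
print(few_shot)
```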

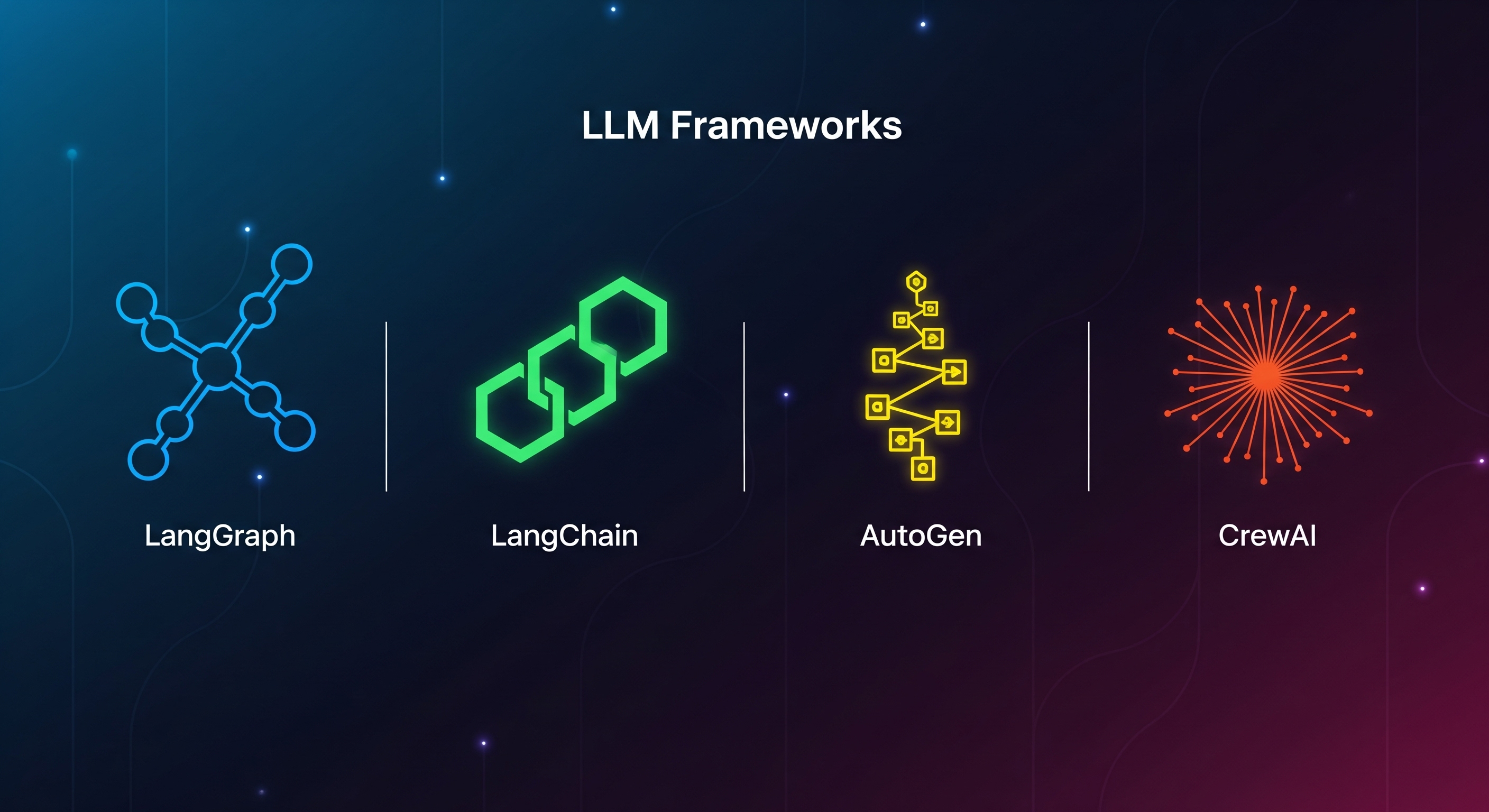

- Explore Agentic AI (LangGraph, CrewAI).

- Deploy with FastAPI, Docker, and Hugging Face Spaces.

🧩 Types of LLMs (By Function)

| Type | Example | Purpose |
|---|---|---|
| Autoregressive | GPT, LLaMA | Text generation |
| Masked Language Models | BERT | Classification, NER |
| Multimodal | Gemini, Flamingo | Text + Image/Audio/Video |
| Instruction-Tuned | OpenChat, Alpaca | Follow user instructions |
| MoE (Sparse) | Mixtral | Route each token to a few expert sub-networks |
| Agentic Systems (built on LLMs) | AutoGPT, CrewAI | Combine reasoning & planning |
| SLMs (Small Language Models) | Phi, TinyLlama | Efficient use on limited compute |
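The "sparse" in MoE means only a few experts run per token. The sketch below shows top-k routing in the spirit of Mixtral (a router scores every expert, only the top-k are evaluated, and their outputs are mixed with a softmax over the selected scores). The experts here are hypothetical toy functions and `moe_forward` is an illustrative name; in a real model each expert is a feed-forward network and the router weights are learned.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Vector:
    """Sparse MoE layer: score all experts, run only the top-k, mix their outputs."""
    # Router logits: one dot product per expert.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in top])  # renormalize over the selected experts only
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only top_k experts are evaluated: this is the "sparse" part
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy experts and router weights for a 2-dim input.
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [0.0 for _ in v],
    lambda v: [-a for a in v],
]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
x = [1.0, 0.5]
result = moe_forward(x, gate, experts, top_k=2)
print(result)
```

With 4 experts and `top_k=2`, each token pays the compute of only 2 experts while the model still holds the parameters of all 4.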

🧰 LLM Tech Stack to Learn

- Modeling: PyTorch, TensorFlow, Hugging Face

- Prompting: LangChain, PromptLayer

- Retrieval: FAISS, Weaviate, ChromaDB

- Fine-Tuning: LoRA, QLoRA, PEFT, FlashAttention (a faster, memory-efficient attention kernel)

- Deployment: FastAPI, Docker, Streamlit

- Monitoring: Helicone, Weights & Biases

- Agents: CrewAI, AutoGen, LangGraph
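LoRA keeps the pretrained weight matrix frozen and learns a low-rank update instead: the layer computes W0·x + (alpha / r)·B(A·x), with B initialized to zero so training starts exactly from the base model. QLoRA applies the same idea on top of a 4-bit quantized base model. The class below is a minimal pure-Python sketch of the forward pass only (no training loop); `LoRALinear`, the identity `w0`, and the chosen rank/alpha are hypothetical illustration values, not the PEFT library's API.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W0 plus a trainable low-rank update: y = W0 x + (alpha / r) * B (A x)."""

    def __init__(self, w0: Matrix, rank: int = 2, alpha: float = 4.0, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(w0), len(w0[0])
        self.w0 = w0  # pretrained weights, kept frozen during fine-tuning
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # r x d_in
        self.b = [[0.0] * rank for _ in range(d_out)]  # d_out x r, zero-init: no change at start
        self.scale = alpha / rank

    def __call__(self, x: Vector) -> Vector:
        base = matvec(self.w0, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * d for h, d in zip(base, delta)]

    def trainable_params(self) -> int:
        # Only A and B are trained; W0 is not counted.
        return sum(len(r) for r in self.a) + sum(len(r) for r in self.b)


d = 64
w0 = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # toy "pretrained" weight
layer = LoRALinear(w0, rank=4, alpha=8.0)
print(layer.trainable_params(), d * d)  # 512 4096
```

Even in this toy case the adapter trains 512 parameters instead of 4,096; for real layers with thousands of dimensions the savings are far larger.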

📏 Evaluation & Metrics

- Perplexity: How well the model predicts held-out text (lower is better)

- BLEU / ROUGE: N-gram overlap with reference texts (common for translation and summarization)

- F1 Score: Harmonic mean of precision and recall for classification tasks

- Toxicity & Bias: Checks for harmful or unfair outputs

- HumanEval / MMLU: Code generation (HumanEval) and broad multi-subject knowledge (MMLU) benchmarks
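Two of these metrics are simple enough to compute by hand. A minimal sketch, assuming you already have the probability the model assigned to each true token (for perplexity) and binary labels (for F1); the function names are illustrative. BLEU, ROUGE, and the benchmarks are best computed with their standard reference implementations rather than re-implemented.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """exp of the average negative log-likelihood the model assigned to the true tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for one positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Assigning probability 0.25 to every true token is as uncertain as a uniform 4-way guess.
print(round(perplexity([0.25] * 4), 6))  # 4.0
print(round(f1_score([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]), 3))  # 0.667
```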

🔥 Top Open-Source LLMs to Explore (2025 Edition)

- LLaMA 3 (Meta) – General-purpose, state-of-the-art

- Mistral / Mixtral – Open-weight SOTA models

- Gemma (Google) – Lightweight, highly efficient

- DeepSeek / DeepSeek-Coder – Code and general language

- OpenChat / Zephyr – Alignment-tuned chat models (Zephyr, for example, uses DPO rather than classic RLHF)

- Phi-3 (Microsoft) – Small model, strong performance for its size

⚙️ Bonus: LLM Workflows

- Pretraining → Fine-Tuning → Evaluation → Deployment

- RAG: Query → Embed → Retrieve → Rerank → Generate

- Agents: Task → Decompose → Tool Use → Memory → Update → Output
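The RAG flow (Query → Embed → Retrieve → Rerank → Generate) can be sketched end to end in a few functions. This is a toy under loud assumptions: a bag-of-words stand-in for a neural embedding model, brute-force cosine search in place of a vector index such as FAISS, a hypothetical term-overlap reranker where production systems typically use a cross-encoder, and no actual LLM call (the final step only assembles the grounded prompt). All function names are illustrative.

```python
import math
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Dict[str, float]:
    """Stand-in embedding: bag-of-words counts (a real pipeline uses a neural embedding model)."""
    return {word: float(n) for word, n in Counter(text.lower().split()).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 3) -> List[str]:
    """Brute-force nearest neighbours by cosine similarity."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def rerank(query: str, candidates: List[str], k: int = 1) -> List[str]:
    """Hypothetical reranker: fraction of query terms that appear in each candidate."""
    terms = set(query.lower().split())
    return sorted(
        candidates, key=lambda d: len(terms & set(d.lower().split())) / len(terms), reverse=True
    )[:k]


def build_rag_prompt(query: str, context: List[str]) -> str:
    """The 'Generate' step input: the prompt you would send to an LLM (model call omitted)."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}\nAnswer:"


docs = [
    "faiss is a library for efficient vector similarity search",
    "docker packages applications into containers",
    "lora adds trainable low rank matrices to frozen weights",
    "rag grounds llm answers in retrieved documents",
]
query = "what is faiss used for"
top = rerank(query, retrieve(query, docs, k=2), k=1)
print(build_rag_prompt(query, top))
```

Retrieving a wider candidate set cheaply and then reranking a few with a more expensive scorer is the usual reason the pipeline has two separate steps.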

📌 Whether you're building intelligent agents or just getting started with prompt engineering, this cheat sheet offers a practical and visual roadmap to mastering LLMs in 2025.
